Earlier this month, the UPMC Enterprises FLIGHT team hosted a breakfast discussion on the impact of bias, both in AI and in the workplace.

The morning was kicked off by Cassandra Cooper, Manager of Diversity Learning at UPMC’s Center for Engagement and Inclusion. She introduced unconscious bias as the thoughts, feelings, and beliefs we are unaware of that influence how we interact with the world and, more importantly, with each other. Unconscious bias can be favorable or unfavorable, and while no one is immune to it, bias can be managed with awareness.

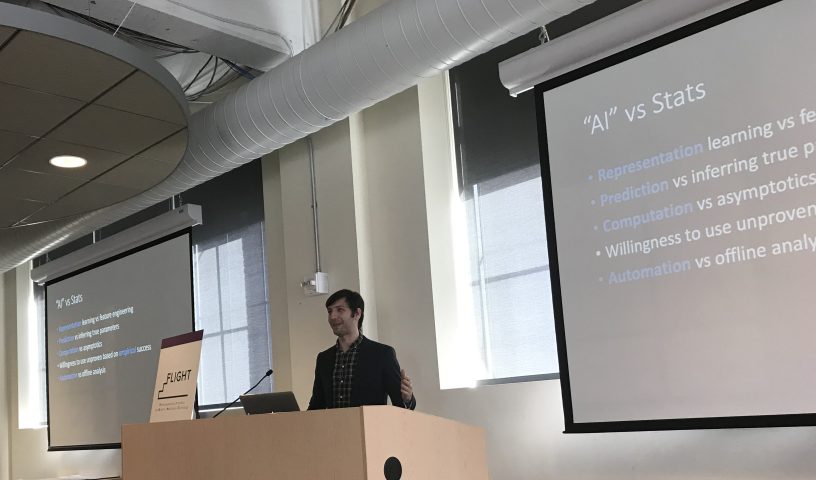

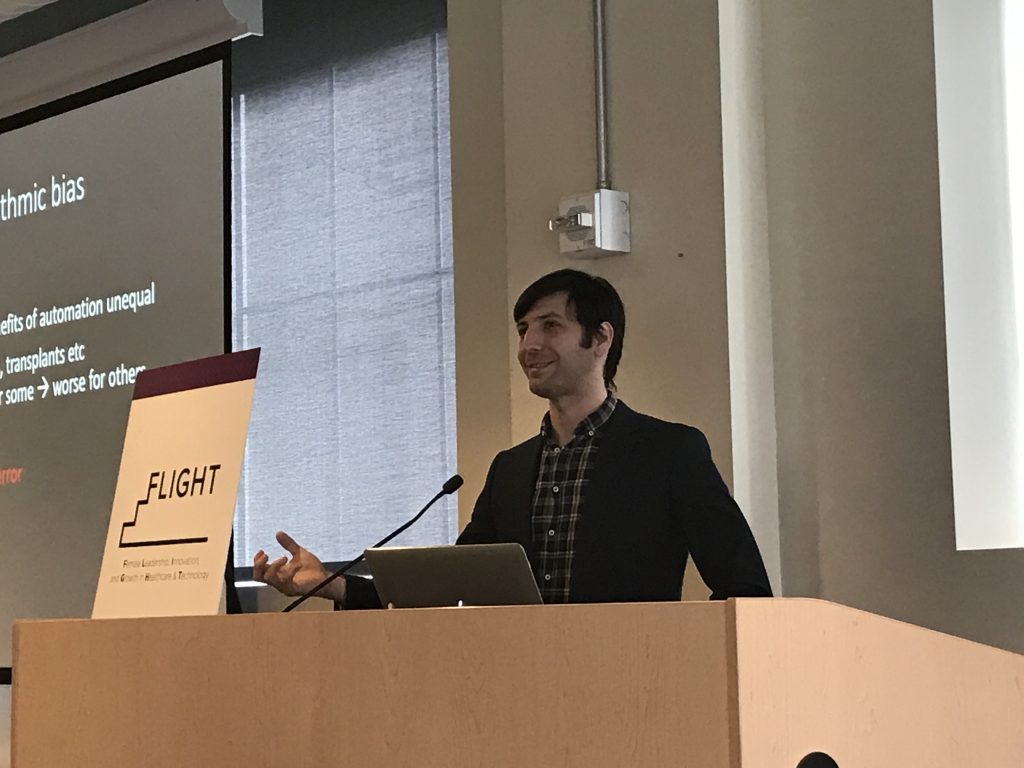

After Cooper’s introduction to bias, the event moved to the keynote presentation, “Social Impacts of Algorithmic Decision-Making,” by Zachary Lipton, PhD, assistant professor at Carnegie Mellon University (CMU).

Dr. Lipton began by noting that current definitions of AI have many limitations. Because AI spans a broad range of topics within computer science, such as machine learning, evolutionary algorithms, and statistical algorithms, it is difficult to define it neither too narrowly nor too broadly. He said the best definition comes from Andrew Moore, Dean of the School of Computer Science at CMU, who describes AI as the “science and engineering of making computers behave in ways that, until recently, we thought required human intelligence.”

As we build machines that make predictions and, ultimately, decisions, it is critical to understand the downstream impact of these technologies, because they often carry ethical implications. This is especially important when we create models to guide processes such as employment decisions and resume screening.

Sources of algorithmic bias include, but are not limited to, underrepresented groups, pervasive correlation among features, and measurement error.

“If there happens to be some pre-existing bias that makes it in as the label, then this becomes a really serious problem,” Dr. Lipton explained as he discussed how algorithms are built. He used the example of the Civil Rights Act of 1964: an algorithm created before the Civil Rights movement would effectively have to be rewritten to comply with modern-day ethics. Dr. Lipton then posed the question, “At what point should we leverage computers for these issues versus handling them ourselves to ensure fairness?”

If bias is introduced, automating processes and decisions through AI and machine learning can create ways to discriminate at a large scale.

“These issues are real and important,” Dr. Lipton said. “They don’t go away with technology…so these are the things that we have to continue to reevaluate, periodically or every day…and think about where we should be inserting technology, where our data comes from, and what we are trying to predict. If anything, this requires more critical thinking, not less.”

Bringing the discussion full circle, Cooper, along with Becky Orenstein, Diversity Learning Consultant at UPMC’s Center for Engagement and Inclusion, spoke about the human element of bias.

Whether we are working with algorithms that automate predictions and decisions or interacting with a colleague in the office, unconscious bias can affect how we work and, consequently, the work we produce.

By making an effort to recognize our unconscious biases, we can begin to create a more inclusive and culturally competent environment for everyone.

Orenstein ended the presentation with some food for thought: “How can we make our unconscious biases conscious? How can we work to recognize and manage them?”